🤥 Faked Up #16

TikTok's AI is summarizing conspiracy theories, Meta ran 222 ads for deepfake nudes, and Telegram apologizes to South Korea

This newsletter is a ~6 minute read and includes 45 links. I ❤️ to hear from readers: please leave comments or reach out via email.

THIS WEEK IN FAKES

Brazil’s Supreme Court unanimously upheld the ban on X (how we got here). Germany is exploring image authentication obligations for social networks. For election topics, all of Google’s generative AI tools will now defer to Search, while X’s Grok AI will now link out to Vote.gov. Awful people are using AI to make clickbait deepfakes about the rape and murder of a doctor in Kolkata.

TOP STORIES

TIKTOK’S CONSPIRACY SUMMARIES

Earlier this year, TikTok launched “Search Highlights,” an AI-generated summary of the result(s) for a specific query.

In a test I conducted on Monday, the feature was very helpful at summarizing conspiracy theories.

My search for [proof that earth is flat] returned a summary that accused NASA of putting out “fake CGI photos and videos” showing curvature in the Earth’s horizon. A search for [how to recognize reptilian people] helpfully informed me that “they walk very strangely” and “don’t blink.” And the Search Highlights for [pyramids built by aliens] informed me that “the construction of the pyramids remains a mystery.”

Mind you, TikTok itself suggested my search queries when I typed in relatively broad terms like [flat earth], [pyramids] and [reptilians]. I’m not going to call this a rabbit hole, but it’s still a pretty efficient conspiracy theory-serving service.

SOUTH KOREA’S MAP OF SHAME

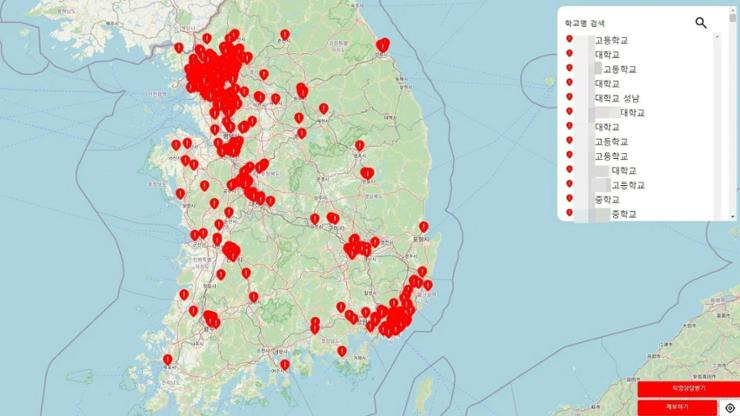

A viral map created by a high school freshman purports to show 500 South Korean educational facilities where students were targeted by deepfake nudes.

While the map’s creator did not independently verify the reports of deepfake abuse, other sources provide a sense of the scale of the issue in the country.

According to South Korean police, there have been 527 reports tied to illegal deepfake porn between 2021 and 2023. A survey organized by the Korean Teachers and Education Workers Union separately tallied more than 500 victims of “illegal synthetic material sexual crimes.” And an ongoing special operation to crack down on deepfake nudes led to 7 arrests this week. (Thanks a million to journalist Jikyung Kim for helping me find some of these stats.)

Last week, I pointed to a Korean Telegram channel with more than 200,000 subscribers that shares deepfake nudes. Telegram is now being investigated to see whether it has been complicit in the distribution of this content (the platform appears to be in an unusually collaborative mood).

Also this week, a major K-pop agency said it was “collecting all relevant evidence to pursue the strongest legal action with a leading law firm, without leniency” against deepfake porn distributors targeting its artists.

This would be a welcome and overdue move that may materially affect websites like deepkpop[.]com, which exclusively posts deepfakes of K-pop stars and drew in 323,000 visitors in July.

DeepKPop includes this infuriating disclaimer on its website: “Any k-pop idol has not been harmed by these deepfake videos. On the contrary we respect and love our idols <3 And just wanna be a little bit closer to them.”

AI UNDRESSERS EVERYWHERE

A few weeks ago, I found that Google Search was running 15 ads for AI undresser apps.

This week, I found that Meta ran at least 222 ads for five different tools that promise you can remove any clothes from an uploaded image.

Each of these tools also has an app either on the App Store or the Play Store (or both). Here’s the full list:
