Deepfake labels and detectors still don't work

🤥 Faked Up #33: Catholic page uses Google to spread unlabeled AI slop on Meta, Deepfake image detectors flop basic test, and Kent Walker thinks crowd-checking on YouTube shows potential

This newsletter is a ~6-minute read and includes 38 links.

HEADLINES

Donald Trump ordered his Attorney General to investigate federal interventions against misinformation in the past four years and recommend “remedial actions.” Douyin blocked more than 10,000 accounts pretending to be foreign “TikTok refugees.” Thailand’s Prime Minister received a call from a deepfaked foreign leader. GitHub is still hosting projects tied to deepfake porn. Misleading content was the second biggest source of user reports on Bluesky last year. Russian influence operation Doppelgänger ran ads worth $328,000 on Meta in the EU. Apple paused its AI news summarization feature that kept getting stuff terribly wrong.

TOP STORIES

This Catholic AI slop page loves Gemini

In October, I wrote about a network of Italian Facebook pages spreading Catholic clickbait and unrelated AI slop to tens of thousands of users.

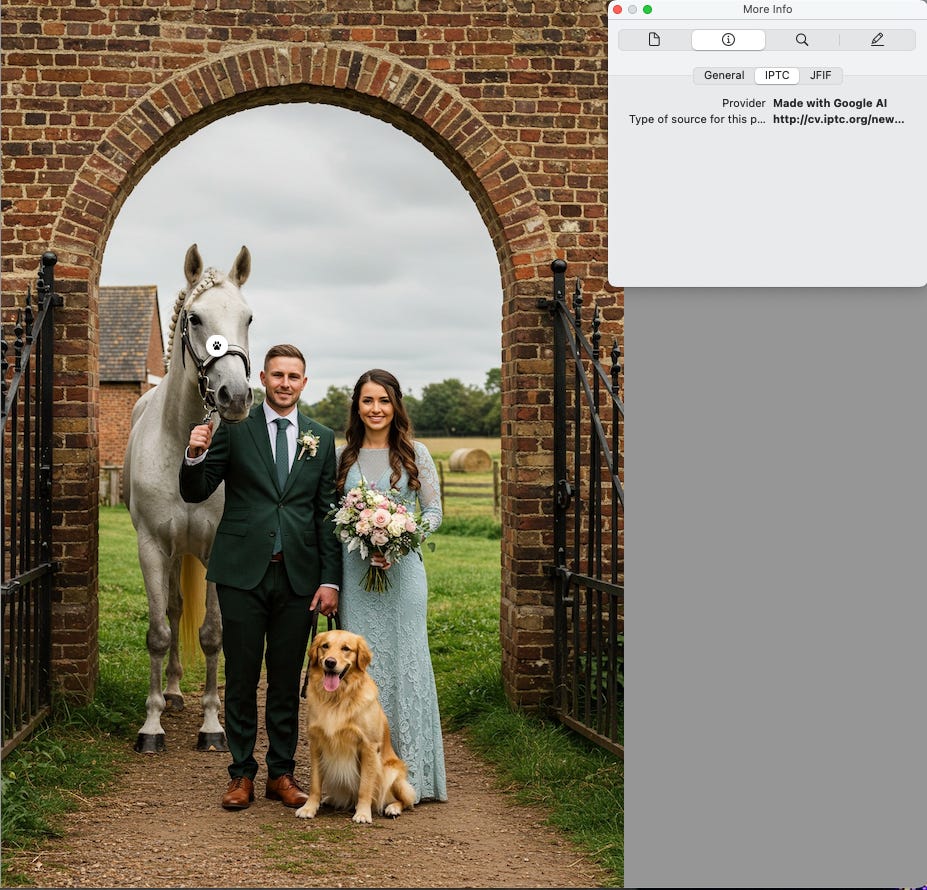

I checked in on the operation and noticed that its largest page, La luce di Gesù e Maria, appears to rely heavily on Google’s Gemini to create its synthetic spam. Take this bucolic image of newlyweds eliciting seemingly sincere reactions:

The humans and animals in the picture were generated with Gemini. To its credit, Google is one of the companies that has agreed to add a marker of fakeness to the outputs of its generative AI tools (more here; see also the screenshot below).

Using this metadata, I found that La luce di Gesù e Maria used Gemini to generate 22 of the 33 images it published in January. Of the 108 images posted in the past month or so, 39 were created with Google’s AI. This apparent increase may partly reflect whether the metadata survived the upload process, so it can’t definitively prove that the page has grown more reliant on Google.
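For readers who want to run a similar check, here is a minimal sketch, not necessarily the method used for the tally above. It assumes the marker is the IPTC “Digital Source Type” URI for generative-AI media, stored as plain text somewhere in the file (for example in an XMP packet or a C2PA manifest), and that the platform didn’t strip it on upload. It is a crude byte search: it doesn’t parse or validate XMP or C2PA, and a missing marker proves nothing. The function names are illustrative.

```python
from pathlib import Path

# IPTC "Digital Source Type" NewsCodes for media made by a generative model.
# Assumption: the generator embeds one of these URIs as plain UTF-8 text
# (e.g. in an XMP packet or a C2PA manifest) and the upload kept it intact.
AI_SOURCE_TYPES = (
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia",
    b"http://cv.iptc.org/newscodes/digitalsourcetype/compositeWithTrainedAlgorithmicMedia",
)


def has_ai_marker(data: bytes) -> bool:
    """Crude check: True if the raw file bytes contain an AI source-type URI.

    This does not parse or validate XMP/C2PA, so a missing marker proves nothing.
    """
    return any(term in data for term in AI_SOURCE_TYPES)


def tally(paths) -> tuple[int, int]:
    """Return (images with the marker, total images) for a list of files."""
    paths = list(paths)
    flagged = sum(has_ai_marker(Path(p).read_bytes()) for p in paths)
    return flagged, len(paths)


def share(flagged: int, total: int) -> float:
    """Fraction of images flagged, guarding against an empty list."""
    return flagged / total if total else 0.0
```

To use it on a folder of downloaded images (the folder name here is hypothetical), call `tally(Path("downloads").glob("*.jpg"))`. Applied to the counts above, `share(22, 33)` is about 0.67 and `share(39, 108)` about 0.36.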

Using Gemini to create nonsensical images doesn’t violate any product policy (whether it’s aligned with Google’s mission or the long-term health of its core product is another question).

Facebook’s failure to label images that carry Google’s metadata breaks a promise Meta made in February 2024 to build “industry-leading tools that can identify invisible markers at scale – specifically, the ‘AI generated’ information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”

I reached out to Meta for comment and was pointed back to the February 2024 blog post’s statement that “it’s not yet possible to identify all AI-generated content,” but I wasn’t given a reason for the lack of labels on images with Google’s metadata.

AI detectors keep promising more than they can deliver

Worry not, though, because the industry is flush with deepfake detectors that will make spotting AI slop at scale extremely easy. Right? Wrong.

Take AIorNot, which raised $5M last week and claims to have 200,000 clients for its AI image detector. Or, if you prefer, use Winston AI, which claims a “99.98% accuracy rate” (h/t Libération journalist Enzo Quenescourt) and has been featured in The New York Times, Wired, The Guardian and more.
