🤥 Faked Up #5

Israeli campaign targets US legislators, disinfo agents try to game fact-checkers, and a news wire is hacked.

Howdy!

Before you dive in, please note that a story about India’s fact-checking tip lines that was linked in last week’s issue has since been retracted.

Faked Up #5 is brought to you by Blinky the Three-Eyed Fish and fake books about possibly real UFOs. The newsletter is a ~6-minute read and contains 38 links.

Top Stories

[With friends like these] The New York Times attributed to the Israeli government an influence operation that used ChatGPT, X and Meta to target US elected officials. The campaign used “hundreds of fake accounts that posed as real Americans” to reach members of Congress, “particularly ones who are Black and Democrats,” with content urging continued funding for Israel’s armed forces. The campaign reportedly cost $2M and was run by the political marketing firm Stoic. Last week, OpenAI and Meta both took action against the firm’s accounts.

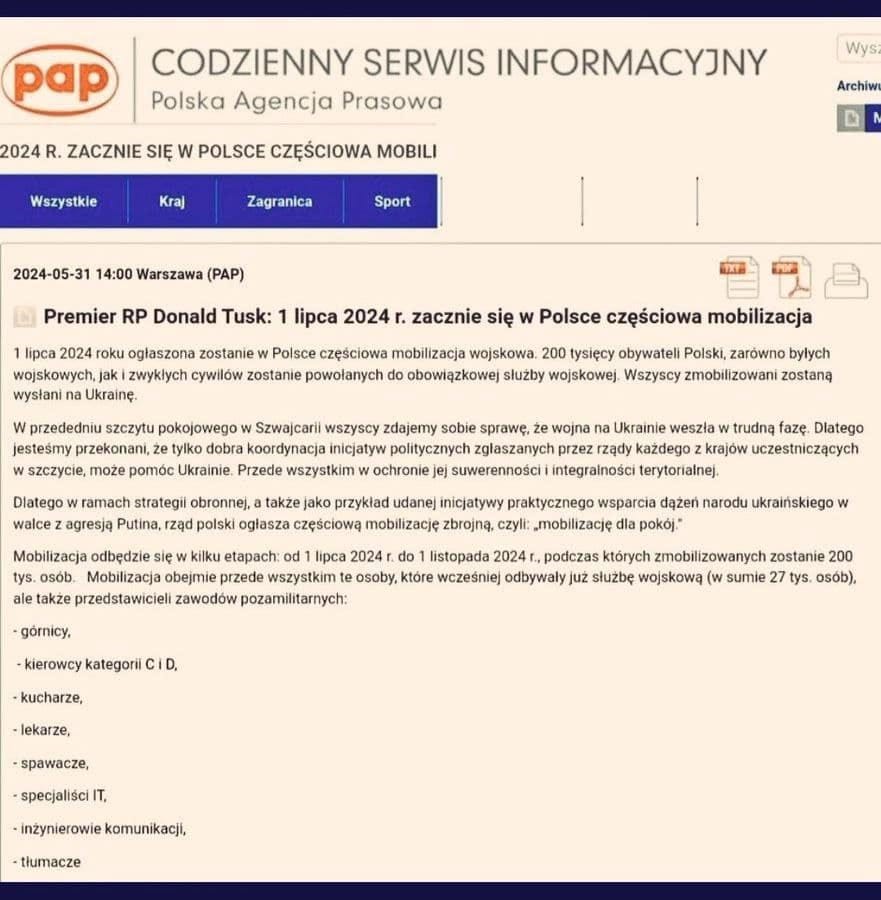

[PAP, smeared] Poland’s state news agency PAP was hacked. The agency twice ran a false report claiming Prime Minister Donald Tusk had ordered the mobilization of 200,000 men in response to the war in Ukraine. Tusk’s government blamed the hack on Russia, which denied involvement. Local fact-checkers at Demagog note that the false story was swiftly retracted and easy to debunk, but at least a handful of social media accounts amplified it anyway.

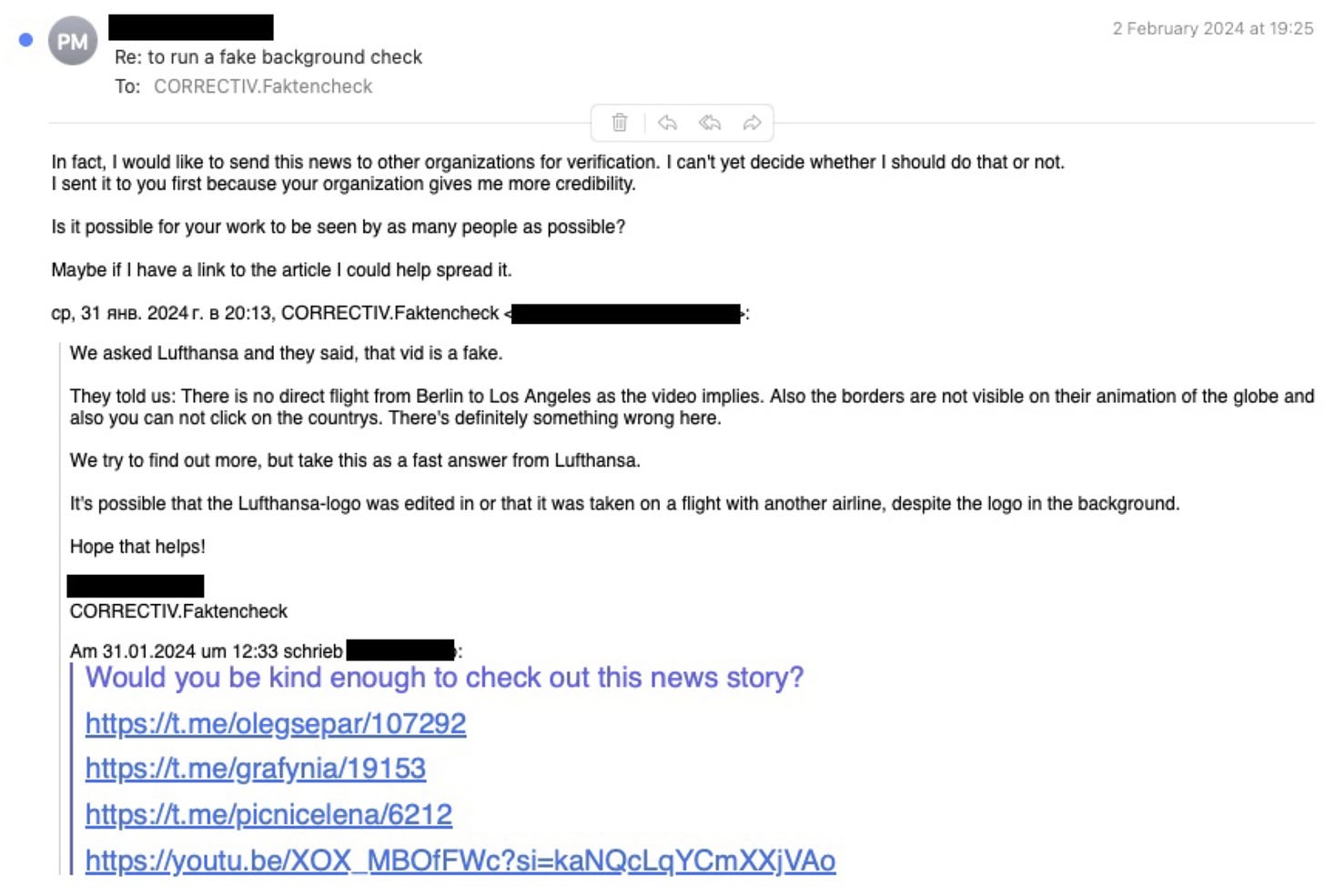

[Fishing for fact checks] Analysts at Check First and Reset Tech uncovered a peculiar pro-Russian influence operation dubbed “Operation Overload.” Like many similar campaigns, the network posted falsehoods on Telegram and X.

More surprisingly, the operation then used Gmail and X accounts posing as concerned citizens to contact more than 800 fact-checkers and newsrooms and ask them to verify the fakes. Check First speculates that Operation Overload aimed to bog down fact-checkers with superfluous work and to draw more attention to its fake narratives through the resulting debunks.

Two things bolster this second hypothesis, which will be familiar to readers of media scholar Whitney Phillips. First, emails like the one below, in which a sender affiliated with the network is very keen for fact-checkers to publish their findings.

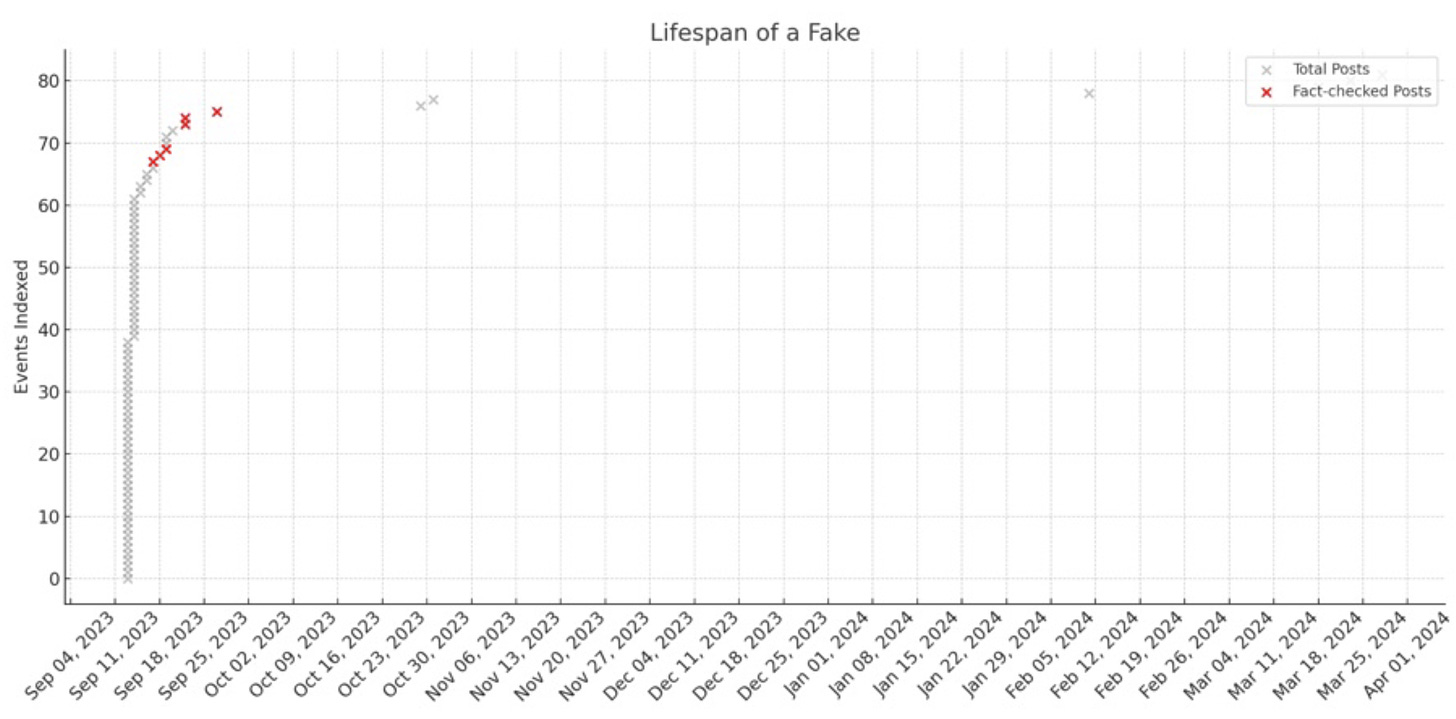

Second, the lifespan of one particular fake, which was re-posted in quick succession until the relevant fact check was published and then abandoned (this bit feels more speculative to me, unless the pattern is consistent across all fakes in the sample).

While most of the fakes took the form of manipulated videos falsely attributed to media outlets like the BBC or Euronews, there were also more than 40 likely doctored photos of graffiti like the one below.

[Allusions travel faster than lies] There’s a lot to unpack in this clever new study in Science.

Researchers at MIT and Penn first assessed the impact of COVID-19 vaccine-related headlines on Americans’ propensity to take the shot. Then they built a dataset of 13,206 vaccine-related public Facebook URLs that were shared more than 100 times between January and March 2021. Finally, they used crowd workers and a machine-learning model to predict the impact of those 13K URLs on vaccination intent.

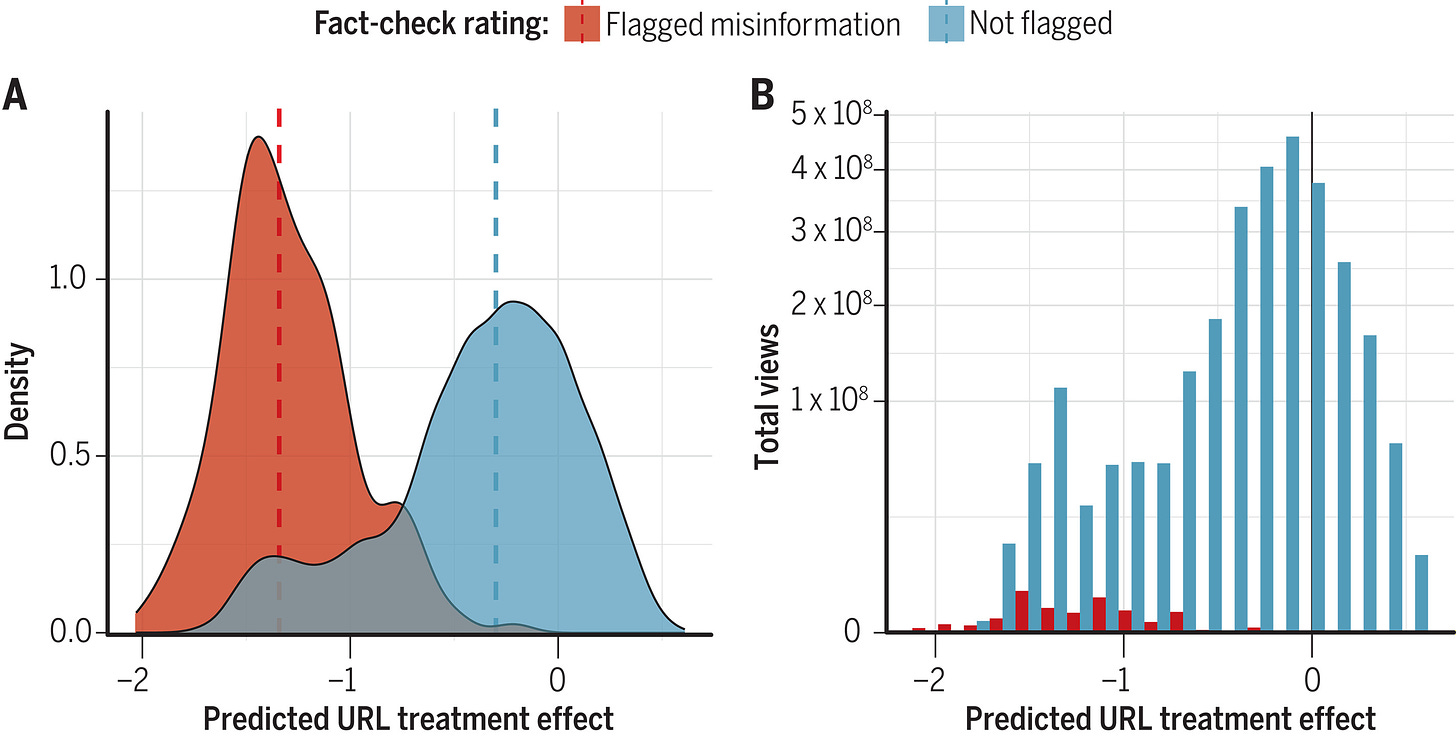

That’s a lot to digest, but the graph below does a great job of summarizing most of the results. On the left side, you can see that the median URL flagged as false by Facebook’s fact-checking partners was predicted to decrease the intention to vaccinate by 1.4 percentage points. That’s significantly worse than the 0.3-point decrease from the median unflagged URL.

But there’s a catch. Unflagged articles with headlines suggesting vaccines were harmful had a similarly negative impact on predicted willingness to get the jab, and they were seen a lot more. Whereas flagged misinformation received 8.7 million views, the overall sample of 13K vaccine-related URLs got 2.7 billion views.

There are two takeaways for me here:
