🤥 Faked Up #19

Bombay High Court strikes down India's government fact-checking, Pixel's "Reimagine" lights the UN on fire, and X's NCII takedown requests get audited

This newsletter is a ~7 minute read and includes 43 links.

THIS WEEK IN FAKES

Most Americans are worried about AI election fakes. Global experts don’t love what generative AI is doing to the information environment. Singapore is considering a law giving election candidates an avenue to flag deepfakes. MrReagan (see FU#11) is suing California over its new deepfake laws. South Korean police committed to spend $2 million on detectors for deepfake audio and video. Canada’s foreign interference committee held another round of public hearings. TikTok deplatformed Russian state media.

TOP STORIES

FACT-CHECKING: NOT FOR GOVERNMENTS

The Bombay High Court struck down the powers of the Indian Government's Fact-Checking Unit (FCU). In a tie-breaker vote, Justice A.S. Chandurkar ruled that the unit was unconstitutional. The FCU was first established in 2019, but only earlier this year was it given the power to request the removal of false online content about the government. Chandurkar argued that the law defined what counts as misleading too vaguely and that it was inappropriate for the government to serve as a “final arbiter in its own cause.”

Jency Jacob, Managing Editor of BOOM Fact Check, told me the decision was a “welcome step,” adding that government fact-checking units are “a dangerous trend where politicians are trying to appropriate fact checking due to the credibility built in the minds of the citizens thanks to the work done by independent fact checkers.”

Jacob thinks governments have “other tools at their disposal” to correct misinformation and should “leave fact checking to independent newsrooms who are trained journalists and follow non-partisan processes.”

AUDITING X

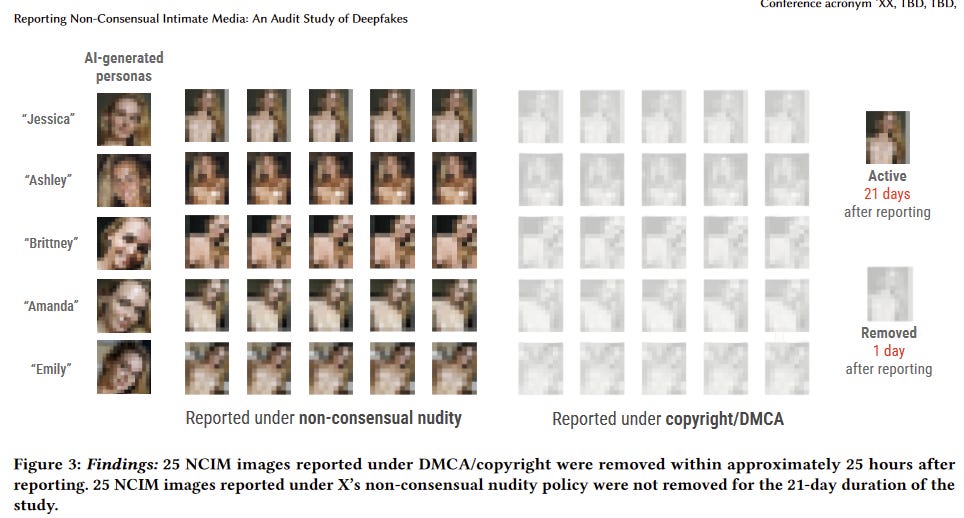

In a preprint, researchers at the University of Michigan and Florida International University tested how X responds to takedown requests for AI-generated non-consensual intimate imagery (NCII).

The researchers created 50 deepfake nudes of AI-generated personas and posted them from 10 different X accounts. They then flagged the images through X’s in-platform reporting mechanisms. Half were reported as copyright violations and the other half as violations of the platform’s non-consensual nudity policy. While the images reported for copyright violations were removed within a day, none of the images flagged as NCII had been removed three weeks after being reported.

DEEPFAKE FACTORY

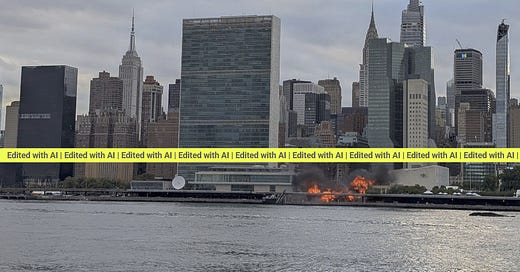

I got my hands on a Pixel 9 this weekend and played around with Reimagine, its AI-powered image editing feature (see FU#15). Because the United Nations General Assembly is meeting this week, I thought I’d try the feature on a picture I took of the UN building.

It was extremely easy, even for someone like me whose visual editing skills peak at Microsoft Paint, to add all kinds of problematic material to the photo. You simply circle the part of the photo you want to “reimagine” in the Photos app, type in a prompt, and pick one of four edited versions.

With very little effort, I was able to do some serious damage to the UN’s façade:
