Good morning, everyone.

Faked Up #9 is brought to you by the end of take-home exams and the word “delve.” The newsletter is a ~6-minute read and contains 51 links.

Update: Last week I wrote about Meta’s messy “Made with AI” labels. On Monday, the company announced it was rebranding them to “AI info.” That’s much better in that it is less accusatory, but it doesn’t fix the underlying limitations of the feature as a way to combat deceptive use of generative AI.

Top Stories

PSYCH! AN AI WROTE THIS — Psychology researchers at the universities of Reading and Essex tested the proposition that generative AI can be reliably used to cheat in university exams. The answer is a resounding yes.

The researchers used GPT-4 to produce 63 submissions for the at-home exams of five different classes in Reading’s undergraduate psychology department. They did not touch the AI output other than to remove reference sections and to regenerate responses that were identical to another submission.

Only four of the 63 exams got flagged as suspicious during grading, and only half of those were explicitly called out as possibly AI-generated. The kicker is that the average AI submission also got a better grade than the average human.

The study concludes that “from a perspective of academic integrity, 100% AI written exam submissions being virtually undetectable is extremely concerning.”

Students aren’t going to be the only ones delegating work to the AI, either. A group of machine learning researchers claim in a preprint that as many as 10% of the abstracts published on PubMed in 2024 were “processed with LLMs” based on the excess usage of certain words like “delves.” Seems significant, important — even crucial!

PILING ON — Two posts falsely claiming that CNN anchor Dana Bash was trying to help U.S. President Joe Biden focus his attention on his adversary Donald Trump during the debate got more than 11M views on X¹ (h/t AFP Fact Check). The posts rely on a deceptively captioned clip that originally appeared on TikTok. As you can see starting at around 20:20 in the CNN video, Bash does point her finger while looking sternly at a candidate.

But given that her head is tilted to her left, and that co-host Jake Tapper is looking to his right while posing a question to Biden, she is clearly signaling Trump to pipe down.

I share this silly little viral thing because it looks like another case of what Mike Caulfield would call misrepresented evidence. Biden did perform terribly at the debate, but this clip wasn’t proof of it.²

NOT OK — In a recent preprint, researchers at the University of Washington and Georgetown studied the attitudes of 315 US respondents towards AI-generated non-consensual intimate imagery (AIG-NCII). They found that vast majorities thought the creation and dissemination of AI-generated NCII was “totally unacceptable.” That remained true whether the object of the deepfake was a stranger or an intimate partner, though attitudes fluctuated somewhat depending on the intent behind it.

In an indication that generalized access could normalize AIG-NCII, respondents were notably more accepting of people seeking out this content.

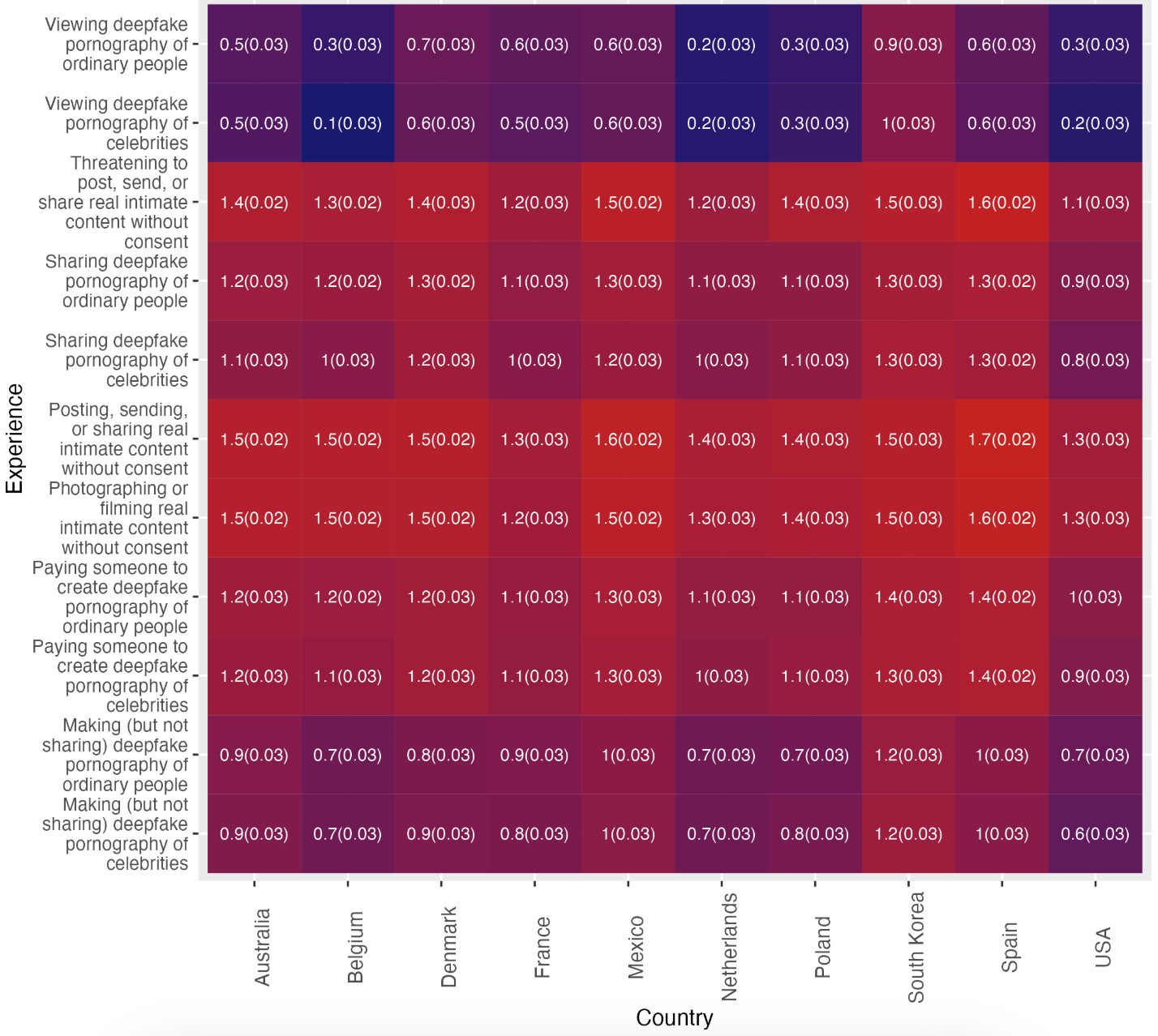

Earlier this year, a separate group of researchers across four institutions shared the results of a mid-2023 survey of 1,600 people across 10 countries that found a similar distinction between creating or disseminating this content and consuming it. In the chart below, you can see the mean attitude, on a scale from -2 to +2, towards criminalizing the behaviors listed in the left column. (The redder the square, the more people favored criminalization.)

While I’m sharing papers on the topic: In another recent preprint, researchers at Google and UW reviewed 261 Reddit posts providing advice on image-based sexual abuse (IBSA), including 51 that were related to a synthetic image. Most co-occurred with sextortion.

SOURCE OF CONFUSION — Citations continue to stump AI chatbots.

Nieman Lab claims that ChatGPT hallucinated URLs for articles by at least 10 different publications with which OpenAI has partnership deals.

AI detection company GPTZero claims that Perplexity is increasingly returning AI-generated sources, which may undermine the search engine’s effort to curb hallucinations by citing material it found on the internet.

Dutch researcher Marijn Sax thinks that the made-up citations in a scholarly article about labeling AI content were…likely AI-generated.

Inspired by this mess, I asked three AI chatbots to give me URLs for 10 articles I’ve written about fact-checking (there are dozens). ChatGPT passed the test. Gemini claimed not to have enough information “about that person” (rude). And Perplexity AI claimed it couldn’t find any articles I wrote and just returned a press release about my move to the Poynter Institute 10 times.
