What’s up, y’all?

Faked Up #4 is brought to you by glue-free pizza and 100% innocent fork-bending magicians. The newsletter is a ~7-minute read and contains 61 links.

Top Stories

[AI Overmews] Last week’s launch of AI-generated summaries on the US-English version of Google Search did not go great. The feature told people to put glue on pizza, eat rocks, and stare at the sun. It claimed JFK graduated from the University of Wisconsin 6 times, most recently in 1993.

More worryingly, AI Overviews misidentified poisonous mushrooms, botched the remedies to a rattlesnake bite and claimed Barack Obama is Muslim.

Some of this is down to the way queries were formulated. Like an artificial puppy, AI Overviews eagerly returned stuff that matched bizarro queries even if the source was Reddit maestro fucksmith or satirical newspaper The Onion.

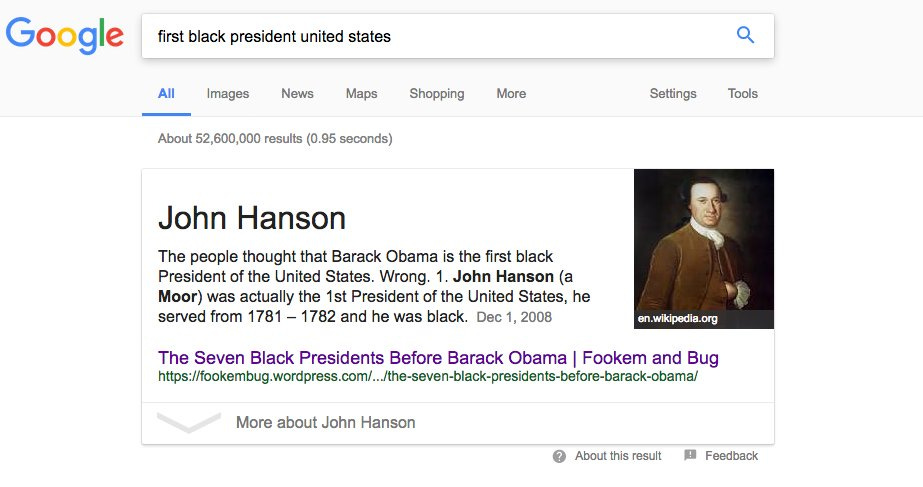

This is not a new problem. Seven years ago I wrote about “featured snippets,” Search’s longstanding summarization experience, struggling with a query about the first Black president of the United States.

AI Overviews struggled with a similar query last week, despite AI reportedly being bigger than fire.

To assess AI Overviews beyond one-off gaffes, though, I thought I’d explore multiple queries on a specific user journey.

I looked for a topic seemingly well-attuned to the triggering logic of AI Overviews, which appeared to rely heavily on forum content and prioritize “how-to” responses. The topic also had to be one where online content is of mixed quality and getting the wrong result could lead to somewhat harmful consequences.

I settled on a teenage boy looking for information about “looksmaxxing,” a catch-all buzzword for beauty practices. These range from basic hygiene to aggressive methods that verge into body dysmorphia like under-eating, steroids and surgery.

One of the most popular elements of looksmaxxing is “mewing,” which is not something a cat does, but a tongue posture that allegedly makes your jawline more masculine. The method is named after a controversial father-son duo of British orthodontists. It is not widely endorsed; in a memorable turn of phrase, the American Association of Orthodontists (AAO) says that “scientific evidence supporting mewing’s jawline-sculpting claims is as thin as dental floss.”

So, how did AI Overviews handle a mewing-curious teen? The result for [looksmaxxing techniques] was a mixed bag, highlighting a questionable app that rates your masculinity based on a selfie while noting that mewing is “not scientifically supported but is said to improve jaw structure.”

[best mewing techniques for 16 year old boy] went straight into how-to mode and recommended “20-30 minutes” of mewing a day.

[benefits of mewing] was a bit better, though I don’t love the both-sides approach that opens with “some people” endorsing a range of benefits and only flags what experts have said after a scroll.

Something similar occurred for [does mewing work at 13], though the warning here was attributed and clear.

AI Overviews also went deep on product recommendations for [best mewing products].

Finally, I was able to trigger a few responses for bone smashing, which is generally assumed to be a troll rather than something looksmaxxers actually do.

I shared all these looksmaxxing results with David Scales, a physician, sociologist and assistant professor at Weill Cornell Medicine. First, he noted that this is a lightly researched topic which doesn't lend itself to empirical study. Mewing is likely low risk, but also low certainty.

The bigger question, according to Scales, is "how should AI provide guidance on behaviors in the setting of scientific uncertainty?" In an "evidence-free zone" like mewing, specialty societies like the AAO typically avoid making recommendations. The AI Overviews, instead, "seem to be both-sidesing it."

And this, I think, is the crux of the matter.

Many AI Overviews bugs were embarrassing but fixable. The plan for a fucksmith-free product appears to be to turn up the dial on source quality and show the feature less.

The deeper issue is how sources are combined into an intelligible whole. Rather than “purée results,” Google’s AI summaries should help users understand the relative trustworthiness of the sources behind a topic.

That is Google’s killer app. That’s what its awesome Search quality teams are set up to deliver. Everything else is just looksmaxxing.
