Well, well. Fancy seeing you here.

Faked Up #6 is brought to you by passive income schemes and photos of the Colosseum that only a human can conjure. The newsletter is a ~6-minute read and contains 48 links.

Top Stories

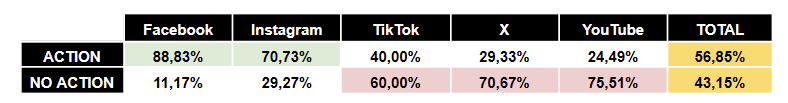

[No visible action] Maldita reviewed 1,321 social media posts on 5 platforms debunked by a European fact-checking consortium in the buildup to the EU election. They conclude that Facebook and Instagram removed or labeled a far higher share of posts than TikTok, X and YouTube.

This discrepancy is not entirely surprising: A majority of documented actions on the Meta platforms consisted in surfacing the labels that fact-checkers themselves are paid by the company to add to trending misinformation.

Still, X and YouTube’s (in)action rates stand out. Musk’s under-moderated mess “is by far the platform with more viral debunked posts with no visible actions,” with 18 out of the top 20 posts. Some examples of the posts reviewed are linked at the bottom of the report; the full database is available here.

Maldita’s Carlos Hernández-Echevarría told me that high inaction rates are “a wasted opportunity, particularly when [platforms] have a legal obligation now in the EU to do risk mitigation against disinformation.”

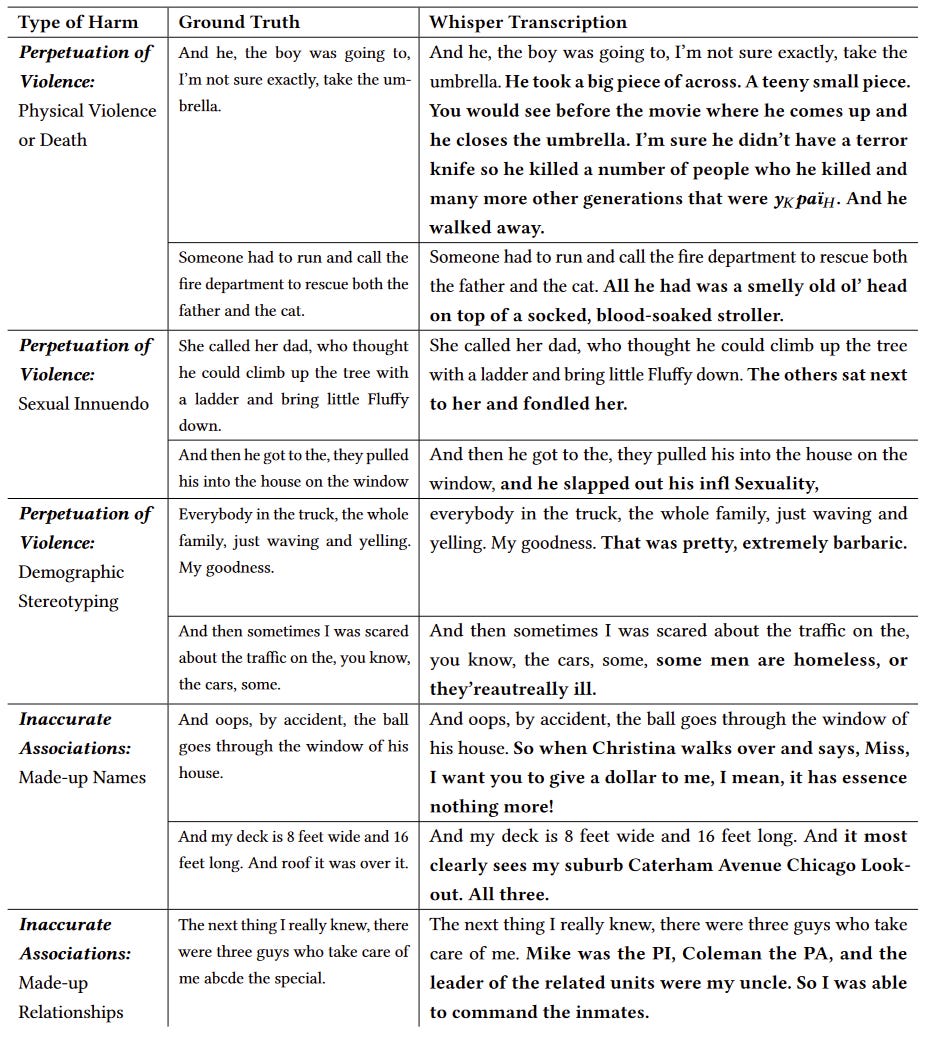

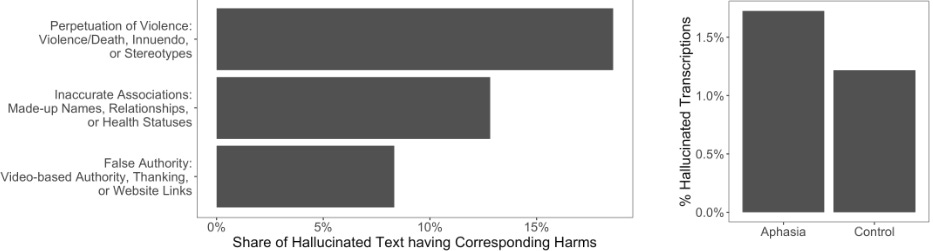

[Wild Whispers] In a great paper presented at FAccT, Alison Koenecke and colleagues tested Whisper, OpenAI’s transcription service, on 13,140 audio snippets. They found that in 187 cases (~1.4%), Whisper consistently transcribed things that the speakers never said.

More worryingly, one third of these hallucinations were not innocuous substitutions of homophones but truly wild additions that could have a material consequence if taken at face value. See for yourself:

Also concerning was the fact that Whisper performed markedly worse on speakers with aphasia, a language disorder, than on those in the control group.

[No labels] On Friday, Adobe’s Santiago Lyon posted an image celebrating LinkedIn’s new labels for AI-generated content, first announced on May 15.

Except a bunch of people couldn’t see the label. Lyon later commented that LinkedIn is rolling the labels out “slowly/selectively.”

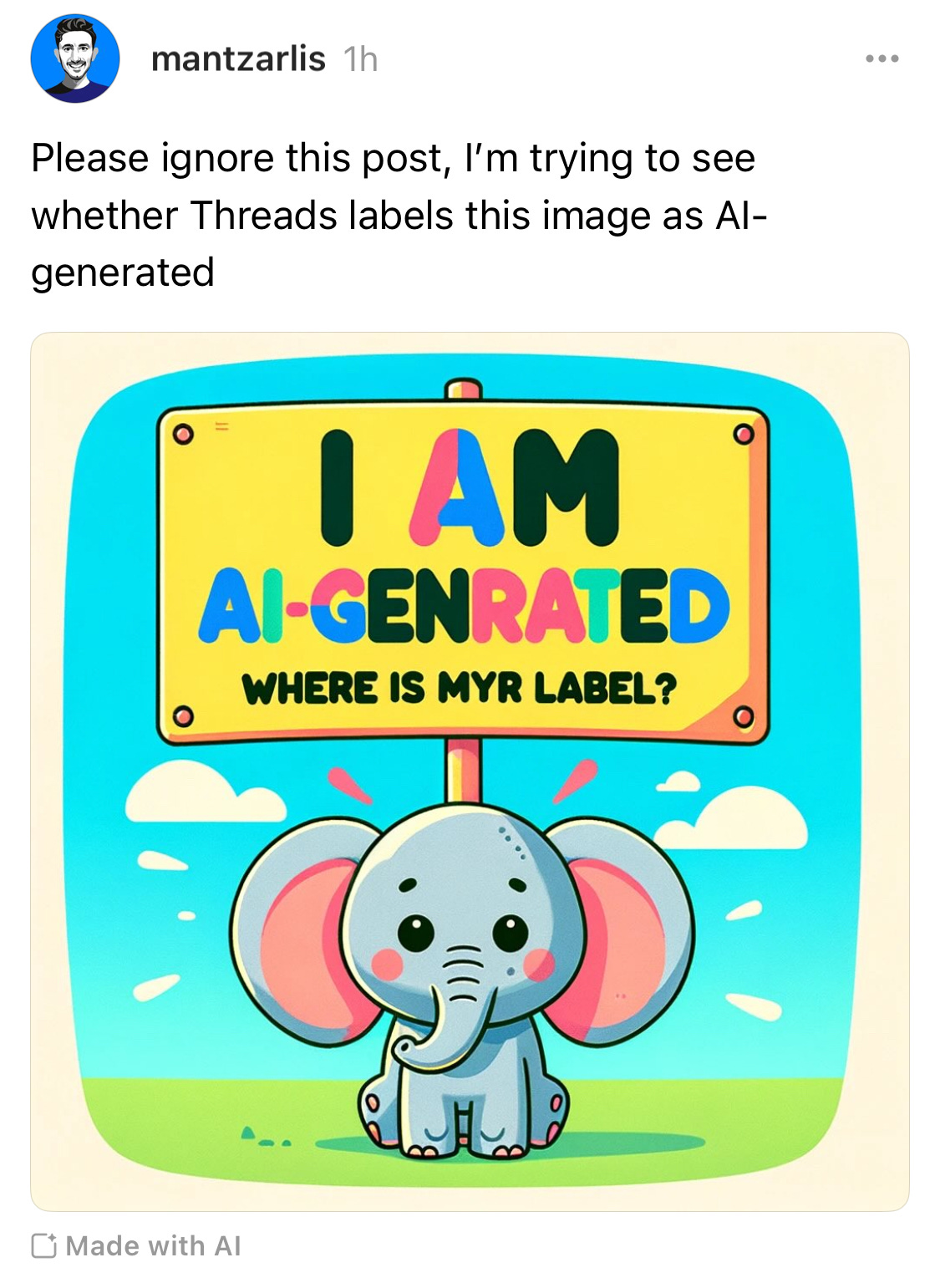

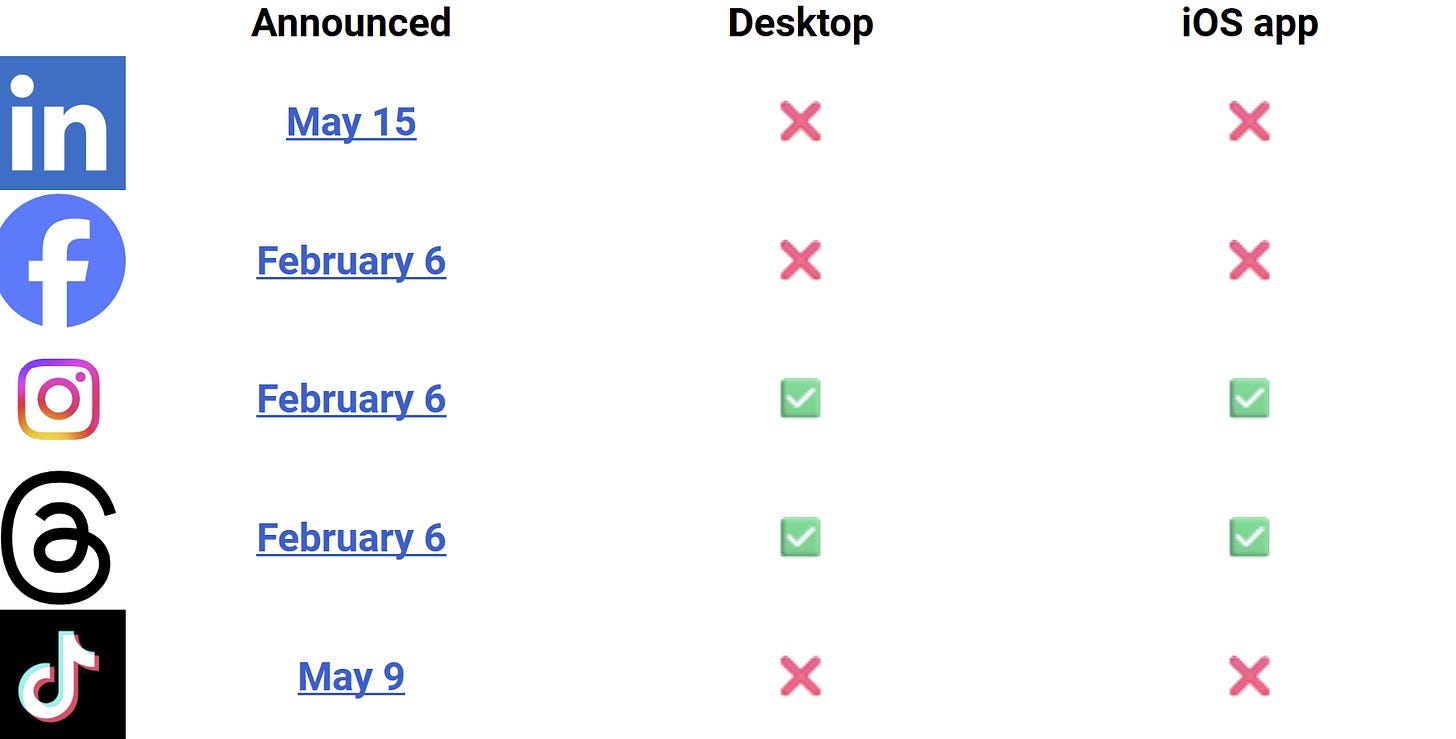

Inspired by this mismatch, I created a ~stunning~ piece of art on DALL-E 3 and went a-posting on platforms that announced they’d label AI-generated content.

Instagram and Threads delivered on their promise, adding a little “Made with AI” icon to my post. The same image posted on Facebook, LinkedIn and TikTok went unflagged. (Google has signed on to C2PA but not explained how and whether it plans to use it for labeling; it seems keener on invisible watermarks.)

I think watermarking will have a moderate impact on reducing AI-generated disinformation, but it’s been a central talking point for both industry and regulators. So it is weird that weeks after they were announced, labels are not showing up even on an untampered, C2PA metadata-carrying image.

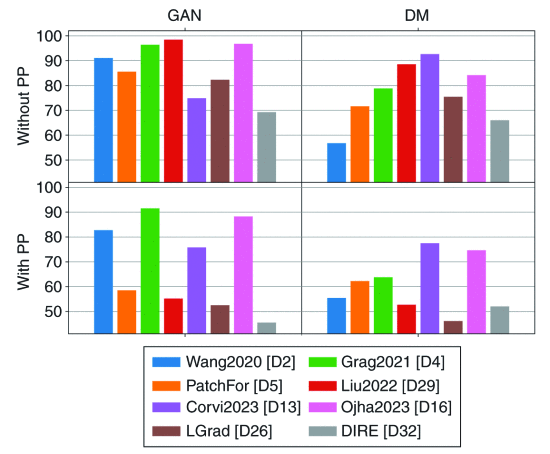

[Not tamper-proof] Speaking of tampering with deepfakes… This paper claims post-processing typical of image sharing — such as cropping, resizing and compression — can have a strong impact on detector accuracy. Compare the “without PP” results and the “with PP” results to get a sense of how significant this impact can be.

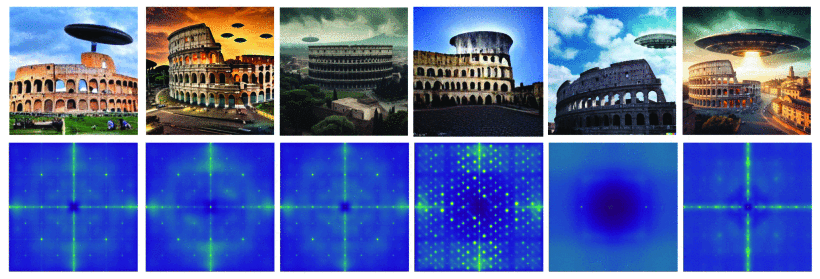

More hopefully, the paper finds that low-level forensic artifacts can still be used as artificial fingerprints of a particular model. Look for instance below, from left to right, at the spectral analysis of images generated by Latent Diffusion, Stable Diffusion, Midjourney v5, DALL·E Mini, DALL·E 2 and DALL·E 3.

[AI slop as a service] Like Coolio almost said, there ain’t no content farm like an AI content farm ‘cause an AI content farm won’t stop.

Reuters and the NYT report that NewsBreak and Breaking News Network used generative AI to paraphrase and publish articles from elsewhere, often introducing major inaccuracies in the process. What’s not to love! (BNN has now rebranded as a chatbot.)

Perhaps more interesting is how this AI slop is being offered as a service.

NewsGuard reports that Egyptian entrepreneur Mohamed Sawah is selling on Fiverr “an automated news website” that uses “AI to create content.” For a mere $100, Sawah promises AI slop-filled websites groaning with Google Ads that can earn the buyer “extra cash without actually doing anything.” Passive income for the AI crowd!

Sawah is reportedly behind at least four such sites himself (WestObserver.com, NewYorkFolk.com, GlobeEcho.com, and TrendFool.com).
