🤥 Faked Up #12

Google is running ads for undresser services, the world is seeking fact checks about Imane Khelif, and AI blends academic results with "some people's" beliefs about sleep training.

This newsletter is a ~7 minute read and includes 44 links.

TOP STORIES

STILL MONETIZING

Google announced on July 31 new measures against non-consensual intimate imagery (NCII). The most significant change is a new Search ranking penalty for sites that “have received a high volume of removals for fake explicit imagery.” This should reduce the reach of sites like MrDeepFakes, which got 60% of its traffic from organic search in June and has a symbiotic relationship with AI tools like DeepSwap that fuel the production of deepnudes.

This move is very welcome and I know the teams involved will have worked hard to get it over the finish line.

There is much more the company can do, however. The sites that distribute NCII are only half of the problem. The “undresser” and “faceswap” websites that generate non-consensual nudes are mostly unaffected by this update, because they don’t typically host any of the deepnudes that their users create.

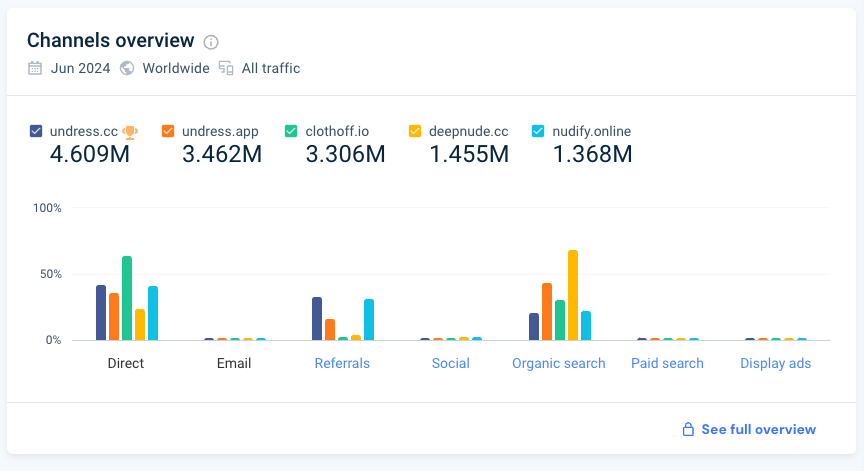

And these sites are also getting millions of visits from Search:

It is these undressers that are at the center of Sabrina Javellana’s heart-wrenching story. In 2021, Javellana was one of the youngest elected officials in Florida’s history when she discovered a trove of deepnudes of her on 4chan. I urge you to read the New York Times article on how this abuse drove her away from public life.

Google is not yet ready to go after undressers in Search (a mistake, imo). But it has promised to ban all ads to deepfake porn generators.

Unfortunately, it is breaking that promise. Over the past week, I was able to trigger 15 unique ads while searching for nine different queries related to AI undressing. Below are three examples (ads are labeled “sponsored”):

The ads point to ten different apps or websites. As is typical for this ecosystem of AI-powered grifting, these tools offer a variety of text- and image-generation services, and it’s hard to tell whether their dominant use case is NCII.

This is the case with justdone[.]ai, which appears to have run hundreds of Google Ads. Most of these were for legitimate use cases, but they included a couple advertising an “Undresser ai” (as well as two ads for AI-written obituaries, which have previously gotten Search into trouble).

And deepnudes are clearly a core service for several of the sites I found advertising with Google, including ptool[.]ai, which promises that “our online AI outfit generator lets you remove clothes” and mydreams[.]studio, which has run at least 15 Google ads.

MyDreams suggests female celebrity names like Margot Robbie as “popular tags” and gives users the option to generate images through the porn-only model URPM.

Google is not getting rich off of these websites, nor is it willfully turning a blind eye. These ads ran because of classifier and/or human mistakes and I suspect most will be removed soon after this newsletter is published. But undressers are a known phenomenon and their continued capacity to advertise on Google suggests that teams fighting this abuse vector should be getting more resources (maybe they could be reassigned from teams working on nonexistent user needs).

And to be clear, Google is not alone. One of the websites above was running ads on its Telegram channel for FaceHub:AI, an app that is available on Apple’s App Store and promises you can “change the face of any sex videos to your friend, friend’s mother, step-sister or your teacher.” Last week, Context found four other such apps on the App Store advertising on Meta platforms.

BEHIND THE SLOP

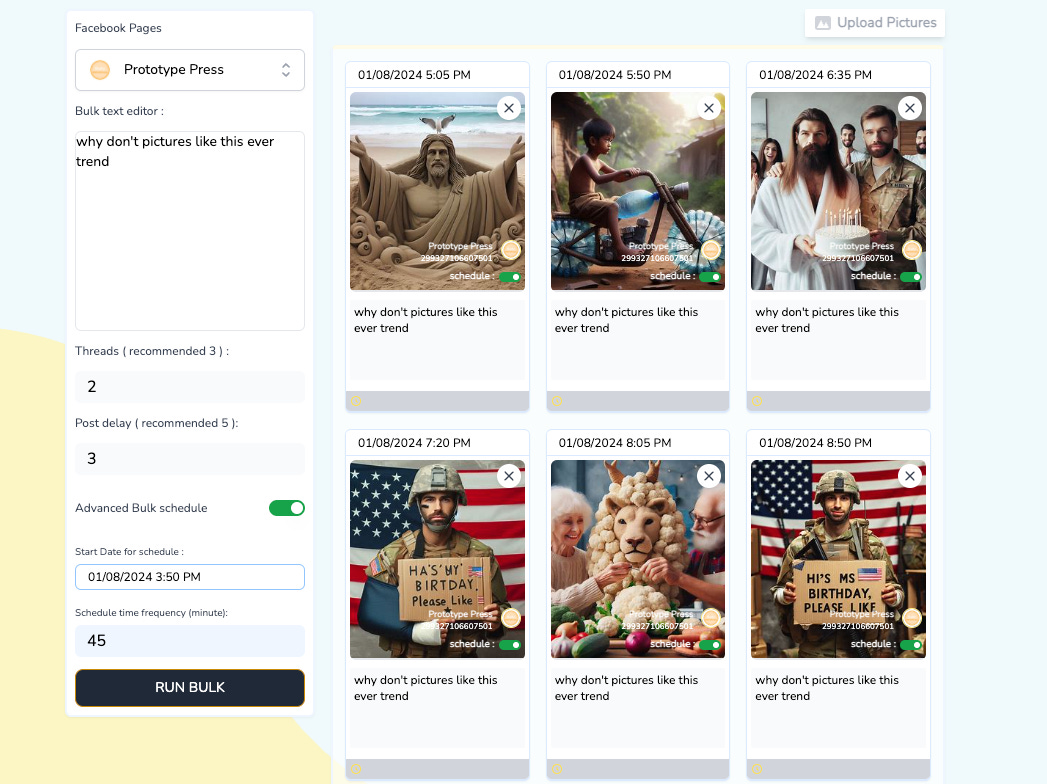

Jason Koebler at 404 Media has been on the Facebook AI slop beat longer than most (🫡, Jason). On Tuesday, he dropped a must-read deep dive on the influencers teaching others how to make money flooding Facebook pages with crappy images generated on Bing Image Creator with prompts like “A African boy create a car with recycle bottle forest.” One slopmaster allegedly made $431 “for a single image of an AI-generated train made of leaves.”

I think this article is to AI slop what Craig Silverman’s piece on Macedonian teens was to fake news in 2016, because it reveals that behind the wasteland of Facebook’s Feed is a motley crew of enterprising go-getters making algorithm-pleasing low-quality content in an attempt to make an easy buck.

IMANE’S MEANING

The controversy over Olympian Imane Khelif has been widely covered, so I’ll only share three quick things. First, this AP News article about the International Boxing Association, the discredited association that seeded the doubts about her sex and chaotically failed to produce any evidence. Second, the “unacceptable editorial lapse” that led The Boston Globe to misgender Khelif. And finally, a glimmer of hope: “fact” and “fact-checking” were top rising trends related to Google Search queries about the Algerian athlete over the past week, suggesting many folks around the world are just trying to figure out what’s going on:

HOW FACT-CHECKERS USE AI

Tanu Mitra and Robert Wolfe at the University of Washington interviewed 24 fact-checkers for a preprint on the use of generative AI in the fact-checking process. I couldn’t help but think that the use cases described — mostly around classifying or synthesizing large sources of information — are incremental rather than transformative.
